Your content is being discovered, evaluated and recommended by systems your analytics can’t see. Large language models and conversational AI provide answers, compare suppliers and guide buyer choices, often without sending a clear referral. For brands that sell considered, high-value products and services, that creates a measurable blind spot at the point where customers are weighing options and building confidence.

This article explains what’s changing, why traditional attribution fails, and what practical steps you can take now to restore visibility and make better decisions. We use GA4 for the examples.

Why traditional attribution is failing

Most analytics tools were built for clicks, referrers and last-touch logic. They assume the user journey passes through identifiable signals: a search result, a social link, an ad. Language models change that pattern.

LLMs such as ChatGPT, Perplexity and Claude blend information from many sources and often present answers without linking back to original pages. When they do provide links, the conversational interface can strip or alter referrer data. As a result, visits that originate in AI interactions frequently show up as direct or organic traffic, or vanish from attribution entirely.

This matters most for considered purchases. Senior decision‑makers may use an LLM to shortlist suppliers, then return later to visit your site and convert. Under a last‑click model, the AI interaction that built confidence gets no credit. Popular analytics platforms (such as Adobe Analytics) have shared guidance on detecting AI and bot traffic, but detection alone doesn’t fix attribution. You’ll need process and measurement changes to convert detection into insight.

What you can measure right now

Visibility doesn’t require a ground‑up rebuild. It requires reconfiguring tools and adding server‑side insight.

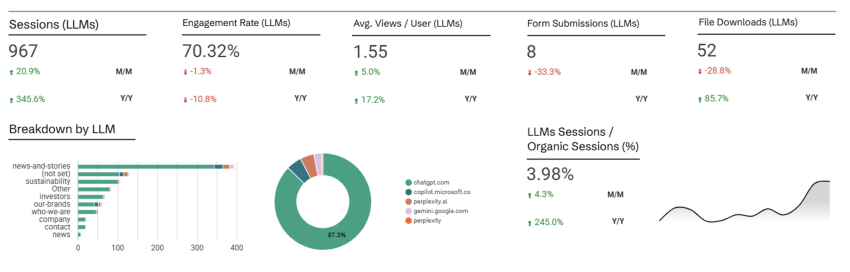

1. Create an AI channel in GA4

Define a distinct channel for AI and conversational referrals. Use referrer patterns and known agent strings to isolate traffic from platforms and crawlers. At 7DOTS we’ve created a bespoke GA4 LLM template, which populates Google Looker Studio and provides a practical starting point for building consistent channel definitions (and insight).

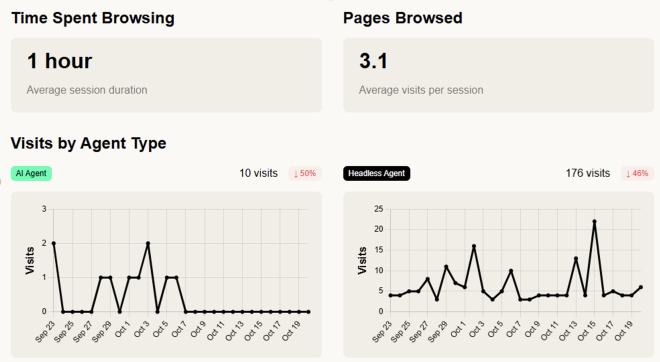
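As a rough illustration of what a referrer-based channel rule can look like, here is a minimal Python sketch. The hostname list is our own illustrative assumption and is not exhaustive, and `classify_referrer` is a hypothetical helper, not part of GA4 or of the 7DOTS template. It simply labels a raw referrer URL as AI, Direct or Other.

```python
import re
from urllib.parse import urlparse

# Assumption: an illustrative, non-exhaustive list of AI assistant hostnames.
# Review and extend it against the referrers you actually see.
AI_REFERRER_PATTERN = re.compile(
    r"(^|\.)(chatgpt\.com|chat\.openai\.com|perplexity\.ai|claude\.ai|"
    r"gemini\.google\.com|copilot\.microsoft\.com)$"
)


def classify_referrer(referrer: str) -> str:
    """Label a raw referrer URL as 'AI', 'Direct' or 'Other'."""
    if not referrer:
        # No referrer at all is what analytics tools usually report as direct.
        return "Direct"
    # Accept bare hostnames such as "claude.ai" as well as full URLs.
    url = referrer if "//" in referrer else "//" + referrer
    host = (urlparse(url).hostname or "").lower()
    if AI_REFERRER_PATTERN.search(host):
        return "AI"
    return "Other"


if __name__ == "__main__":
    for ref in ["https://chatgpt.com/", "https://www.google.com/", ""]:
        print(repr(ref), "->", classify_referrer(ref))
```

A similar pattern could be adapted for a regex condition on session source in a GA4 custom channel group, although the exact matching behaviour there should be checked in GA4 itself.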

2. Use server‑side logs

Server logs capture every request, including bot and crawler activity that front‑end analytics might miss or filter. Regular log analysis reveals which AI agents (for example GPTBot or ClaudeBot) touch which pages and how often. That helps you understand what LLMs are crawling and where your content is likely to contribute to model answers. We’ve been testing Dark Visitors for this and have been pretty impressed.
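If you want to try this by hand first, the sketch below counts AI crawler hits per page from access log lines. It assumes the common "combined" log format used by Apache and Nginx, and the agent list is an illustrative assumption (GPTBot and ClaudeBot from above, plus PerplexityBot). `count_ai_hits` is a hypothetical helper name.

```python
import re
from collections import Counter

# Assumption: logs are in the "combined" format, where the line ends with
# "request" status bytes "referrer" "user-agent".
LOG_LINE = re.compile(
    r'"(?:GET|POST|HEAD) (?P<path>\S+) [^"]*" \d{3} \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)

# Assumption: illustrative, non-exhaustive list of AI crawler tokens.
AI_AGENTS = ("GPTBot", "ClaudeBot", "PerplexityBot")


def count_ai_hits(lines):
    """Return a Counter keyed by (agent, path) for requests from AI crawlers."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        if not match:
            continue  # Skip lines that are not in the expected format.
        agent = match.group("agent").lower()
        for bot in AI_AGENTS:
            if bot.lower() in agent:
                hits[(bot, match.group("path"))] += 1
                break
    return hits


if __name__ == "__main__":
    import sys

    # Usage: python ai_hits.py < access.log
    for (bot, path), count in count_ai_hits(sys.stdin).most_common(20):
        print(f"{count:6d}  {bot:15s} {path}")
```

User-agent strings can be spoofed, so treat these counts as indicative rather than exact.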

3. Inspect user‑agent and referrer strings

Some conversational interfaces pass identifiable referrers when users click through. Perplexity and similar platforms can appear in referrer data under those conditions. Tracking those patterns manually at first helps you build the rules you’ll automate later.
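One way to make that manual review quicker is to tally the referrer hostnames you are already receiving and scan the list for unfamiliar AI or chat platforms. The sketch below is a minimal example. `tally_referrer_hosts` and the `own_host` parameter are hypothetical names, and the input is assumed to be a plain list of referrer URLs exported from your logs or analytics.

```python
from collections import Counter
from urllib.parse import urlparse


def tally_referrer_hosts(referrers, own_host):
    """Count external referrer hostnames, ignoring your own site."""
    counts = Counter()
    for ref in referrers:
        host = (urlparse(ref).hostname or "").lower()
        if not host:
            continue  # Empty or malformed referrer.
        if host == own_host or host.endswith("." + own_host):
            continue  # Internal navigation, not a referral.
        counts[host] += 1
    return counts


if __name__ == "__main__":
    # Assumption: example.com stands in for your own domain.
    referrers = [
        "https://www.perplexity.ai/search?q=suppliers",
        "https://www.example.com/about",
        "https://www.google.com/",
    ]
    for host, count in tally_referrer_hosts(referrers, "example.com").most_common():
        print(count, host)
```

Hostnames that recur in this list and belong to conversational tools are candidates for the channel rules you automate later.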

4. Monitor multi‑model visibility

Your audience likely doesn’t use a single AI. They probably switch between models and interfaces. Purpose‑built AI visibility tools that track brand mentions across multiple LLMs give you a broader view than single‑model checks or manual prompt testing.

Be aware, though, that AI and LLM platforms still provide very little data. As a result, this kind of monitoring is very much outside‑in: it relies on bulk prompting and analysis of the results.

The dual nature of LLM traffic

Treat LLMs as both a competitor and a conduit.

On one hand, conversational answers can satisfy intent without creating a site visit. That reduces measurable top‑of‑funnel traffic for content that used to attract search clicks.

On the other hand, LLMs surface brands that would not have appeared in a traditional search result and can pre‑qualify users. In considered‑purchase markets this second effect is powerful: an AI answer that includes your company reduces the Confidence Gap. Users who then visit your site are often further along the funnel and more likely to engage meaningfully.

Your measurement framework must account for both effects. Are you losing informational clicks to summarised answers? Or are you gaining higher‑quality visits because an AI recommended your solution? Without segmentation and comparative time series, you can’t answer either.
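To make "segmentation and comparative time series" concrete, here is a minimal sketch that takes one row per month and channel and works out each channel's share of sessions and its engagement rate. The field names (`month`, `channel`, `sessions`, `engaged_sessions`) are assumptions about how you export your data, and `channel_trends` is a hypothetical helper.

```python
from collections import defaultdict


def channel_trends(rows):
    """Compute session share and engagement rate per (month, channel).

    Assumes exactly one row per month and channel, each a dict with the keys
    month, channel, sessions and engaged_sessions.
    """
    rows = list(rows)
    monthly_total = defaultdict(int)
    for row in rows:
        monthly_total[row["month"]] += row["sessions"]

    trends = {}
    for row in rows:
        total = monthly_total[row["month"]]
        sessions = row["sessions"]
        trends[(row["month"], row["channel"])] = {
            # Share of that month's sessions: is the channel growing or shrinking?
            "share": sessions / total if total else 0.0,
            # Engaged sessions / sessions: are these visits higher quality?
            "engagement_rate": row["engaged_sessions"] / sessions if sessions else 0.0,
        }
    return trends
```

Comparing the AI channel's share and engagement rate against organic, month by month, is what lets you tell lost informational clicks apart from gains in pre‑qualified visits.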

What marketing leaders should do now

- Segment AI as a distinct channel. Implement GA4 channel grouping for AI/referrer patterns this quarter so future performance reports reflect AI influence rather than misclassifying it as direct or organic.

- Make server log analysis routine. Schedule quarterly audits to discover new bot agents and update your taxonomy. Server‑side analysis is the only reliable way to see which pages LLMs prioritise.

- Track multiple models. Monitor how your brand appears across ChatGPT, Perplexity, Claude, Gemini and other tools your audience uses. Single‑model checks give partial sight at best.

- Rethink attribution expectations. Treat AI as a channel with its own behaviour and conversion profile. Define what success looks like for AI‑seeded visits (e.g., higher engagement, faster qualification) rather than forcing AI into last‑click rules.

- Invest in automated monitoring where it matters. Start with inexpensive, high‑impact changes (GA4 grouping, log audits) and add purpose‑built visibility tools for scaled, multi‑model monitoring when needed.

The landscape is shifting quickly, and the numbers are significant. Research from Ahrefs suggests AI traffic has increased by 9.7x in the last year, and that growth will continue to shift how buyers discover and evaluate options. For considered‑purchase brands, the biggest risk isn’t that AI will replace existing channels. It’s that you’ll optimise the channels you can see while the invisible funnel reshapes where real decisions are made.

If your analytics are still treating all “direct/organic” or unattributed traffic as one blob, you’re very likely missing the impact of conversational AI and LLM‑driven discovery. At 7DOTS we partner with brands in considered‑purchase markets to close this insight gap and drive business growth.